The phrase “The Ethical Conundrum of Scaling up Generative AI” refers to the ethical dilemma and complex issues that arise when expanding the use and capabilities of generative artificial intelligence (AI). Generative AI, such as language models like GPT-3, has the ability to generate human-like text based on the input it receives.

The term “scaling up” implies increasing the size, scope, or impact of generative AI systems. This can involve deploying them in various applications, utilizing them in larger contexts, or enhancing their capabilities. The ethical conundrum arises because the deployment of powerful AI systems has the potential to impact various aspects of society, including privacy, security, bias, misinformation, and job displacement.

When ChatGPT was launched, the globe was thrown into a frenzy of conflicting emotions. The tool was another reminder to marvel at how humans drive scientific and technical evolution. Reactions formed quickly. One camp said the invention would have little to no influence on their lives. Others were in awe of it, appreciated its benefits, and began using it for the efficiency gains it offers to both individuals and businesses. Yet another faction worried that it would eventually replace them and argued that it should be banned. History has shown, however, that humans can harness the potential of technologies such as machine learning, artificial intelligence, and natural language generation in ways that allow both people and technology to survive and thrive.

When we were exposed to generative AI, a gateway to seemingly limitless possibilities for text-based inquiry, learning, and productivity opened up. Generative AI altered the way we work, live, and interact. In 2024, nearly half of consumers (around 49%) say they trust AI-powered interactions with businesses, compared to 30% in previous years. We have clearly reached a tipping point where the possibilities include conducting and assisting with research, brainstorming ideas, drafting articles or emails, accessing summary information, and presenting complex issues for teaching and training.

With the advent of generative AI, R&D was forever altered. Instead of links to other sources, users are given direct responses derived from a range of data. It began to feel as though we were conversing with a smart machine.

But has the long-awaited question finally arrived? When firms are on the cusp of investing millions or even billions of dollars to scale up generative AI and use it for more sophisticated applications and exponential growth, does this not suggest that scaling up will consume jobs? AI innovation in 2024, however, suggests otherwise.

Generative AI: What is it?

Generative AI is a machine learning technique that, in its text-based form, uses natural language processing (NLP) to answer nearly any inquiry posed by the user. It enables astonishingly human-like interactions by combining massive volumes of internet data, large-scale pre-training, and reinforcement learning. In particular, reinforcement learning from human feedback (RLHF) is applied so that the model adapts to diverse contexts and situations and its responses become more accurate and natural over time. Marketing, customer service, retail, and education are among the use cases being explored with generative AI. One of the most significant advances in generative AI is the ability to train using various learning strategies, ranging from unsupervised to semi-supervised learning.

OpenAI’s Prodigy: ChatGPT

ChatGPT is an artificial intelligence chatbot created by OpenAI. It is a natural language processing tool that lets users hold human-like conversations with the chatbot and much more. OpenAI’s Generative Pre-trained Transformer (GPT) language model architecture is the basis for ChatGPT. Since its launch, ChatGPT has taken the internet by storm, encouraging users to envision a plethora of use cases for the model.

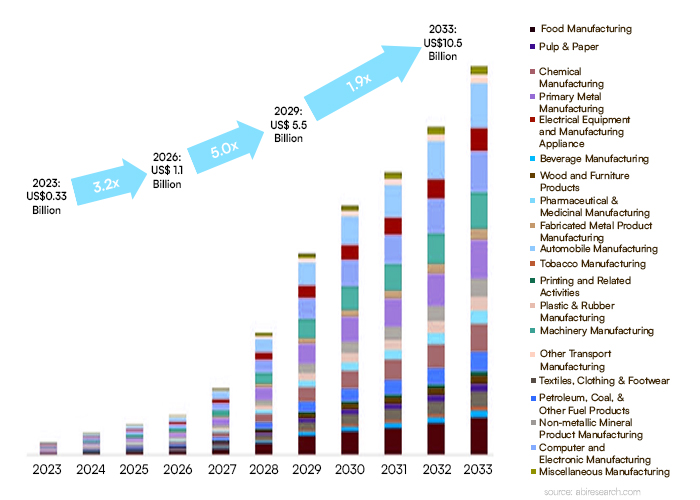

Although ChatGPT led the way, several rivals now build on the same deep learning architecture, the Transformer, which represents a significant advancement in the field of natural language processing (NLP). While OpenAI is in the lead, competition is escalating. According to Precedence Research, the global generative AI market was valued at USD 10.79 billion in 2022 and is expected to grow at a 27.02% CAGR between 2023 and 2032, reaching USD 118.06 billion by 2032. Impressive as all of this seems, there are some limitations.
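As a quick sanity check on the market figures quoted above, the growth rate implied by the two endpoint values can be recomputed. This is only a sketch: it assumes the values are in billions of US dollars and that the growth spans the ten years from 2022 to 2032, and the function name is illustrative.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoint figures quoted above (assumed to be in USD billions).
market_2022 = 10.79
market_2032 = 118.06

cagr = implied_cagr(market_2022, market_2032, years=10)
print(f"Implied CAGR: {cagr:.2%}")
```

Under these assumptions the result lands at roughly 27%, in line with the quoted rate.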

The Challenges of Scaling Generative AI

The ability of generative AI models to produce polished, captivating, and expert-level outputs makes them valuable assets across a range of industries. The latest generative AI algorithms of 2024 can also improve the accuracy and efficiency of existing AI technologies such as computer vision and natural language processing. Forward-thinking leaders who grasp the need to embrace this disruptive technology appreciate its ability to promote corporate success or even determine an organization’s survival. However, realizing the full potential of generative AI models requires large-scale deployment, which poses significant hurdles.

• The Operational Cost Challenge

Generative AI models use more power than non-generative models because they are larger and more complex. GPT-3 has 175 billion parameters, and although OpenAI has not disclosed the figure for GPT-4, estimates suggest it has at least five times as many.

Generating an answer requires many forward passes through the model, typically one per generated token, which increases the computing burden. As a result, both inference and training costs have skyrocketed. To put it in perspective, ChatGPT’s training cost has been estimated at $4–5 million, and recent research estimates that, at its current scale, its inference cost is $700,000 per day.

Prompt length also affects the cost of Transformer-based generative AI in 2024. Longer prompts and context windows can improve model accuracy, personalization, and performance for varied applications. However, they also increase inference costs, because the model has more tokens to process for every request.
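To make the link between prompt length and inference cost concrete, here is a minimal sketch of how much work autoregressive generation involves. It assumes one forward pass per generated token and, as a deliberate simplification, that each pass re-processes the whole sequence so far with no caching. The function name and token counts are hypothetical.

```python
def tokens_processed(prompt_tokens: int, output_tokens: int) -> int:
    """Total tokens fed through the model while generating a response.

    Simplifying assumption: each new token needs one forward pass over
    the full sequence so far (prompt plus tokens already generated),
    with no caching of earlier computation.
    """
    total = 0
    for step in range(output_tokens):
        total += prompt_tokens + step  # sequence length at this step
    return total


short_prompt = tokens_processed(prompt_tokens=100, output_tokens=50)
long_prompt = tokens_processed(prompt_tokens=2000, output_tokens=50)

print(f"Short prompt: {short_prompt} tokens processed")
print(f"Long prompt:  {long_prompt} tokens processed")
print(f"Cost ratio:   {long_prompt / short_prompt:.1f}x")
```

Real serving stacks reduce this work with techniques such as key-value caching, but the direction of the effect is the same: a longer prompt means more computation for the same length of answer.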

• The Sustainability Challenge

Scaling generative AI systems presents additional difficulties because of their energy usage and ecological impact. Generative AI, like numerous other forms of AI-driven creativity, raises significant environmental concerns due to its high energy requirements. Recent studies estimate that the Information and Communications Technology (ICT) industry contributes 2% to 4% of global greenhouse gas emissions. Today’s massive generative AI models have a large carbon footprint because of their energy-intensive nature, and it only grows as the models get bigger and more complex.

How can you swiftly and responsibly scale generative AI?

As the insights from next-generation AI algorithms become more business-critical and technology options proliferate, you must have confidence that your models are performing ethically, with transparent methodologies and explainable results. Organizations that proactively build governance into their generative AI initiatives can better identify and mitigate model risks while also increasing their capacity to meet ethical norms and government rules. As organizations across industries recognize the potential of generative AI to change their operations and drive revenue growth, the issues of operational expense and environmental impact that come with scaling generative AI applications come to the fore.

How to Overcome Scaling Issues with Generative AI Models?

A multifaceted strategy is needed to lower the operational and environmental costs of scaling generative AI applications. Promising routes are explored below.

• Building Specialized Models

Making generative models smaller and more computationally efficient without sacrificing performance is now a hot topic in the field of generative AI. One interesting technique is the development of specialized models optimized for particular tasks and applications. These models can be trained on smaller datasets and are designed to be more computationally efficient. Advanced cloud-based services can also deliver scalable computing resources on demand, allowing organizations to use the power they need without investing in expensive hardware.

Think of huge, all-encompassing models as “Renaissance scholars,” with a broad, potentially surface-level knowledge base. Specialized models, on the other hand, can be compared to “modern-day experts,” honing in-depth knowledge within a particular topic. While Renaissance scholars had their virtues, contemporary experts’ insights are frequently what provide solutions to today’s most pressing problems. In the same vein, larger models may not always offer the most effective or customized answer for every situation, despite their impressively broad output capabilities.

Scaling generative AI applications can be difficult, but adopting specialized models brings advantages in inference speed and cost. By concentrating on creating specialized models, we can unlock more affordable, sustainable, and cutting-edge solutions in the field of generative AI.

• Inference Acceleration through Quantization and Compilation

In addition to building specialized models, techniques like quantization and compilation can optimize generative models, lowering costs and fostering sustainability.

By lowering the precision of the numerical values in the model, for example by storing weights as 8-bit integers instead of 32-bit floating-point numbers, quantization reduces memory and computing needs while largely preserving performance. Both specialized and general generative models benefit from the large reduction in inference costs that this technique provides.
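The idea behind quantization can be illustrated with a minimal sketch of symmetric 8-bit quantization using a single shared scale. This is one simple scheme among several, not the method of any particular library; the function names and weight values are made up for illustration.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map float weights to 8-bit integers using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.33, -0.61]  # made-up example values
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("Quantized:", quantized)
print(f"Largest rounding error: {max_error:.4f}")
```

Each weight now fits in one byte instead of the four bytes of a 32-bit float, at the price of a small rounding error that is bounded by half the scale.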

Compilation converts a neural network’s abstract representation, which is usually expressed in terms of high-level operations or layers, into a form that can be executed efficiently on a particular kind of hardware (such as a CPU, GPU, or dedicated AI accelerator). It involves techniques like operator fusion, which merges adjacent operations to cut memory traffic and overhead, resulting in faster inference and lower latency.
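Operator fusion can be sketched in a few lines. The toy example below uses plain Python lists and a scalar weight as a stand-in for real tensor operations; an actual compiler performs the equivalent transformation on hardware kernels. All names and values are illustrative.

```python
def linear_bias_relu_unfused(x, weight, bias):
    """Three separate operations, each producing an intermediate list."""
    scaled = [xi * weight for xi in x]          # op 1: multiply
    shifted = [si + bias for si in scaled]      # op 2: add bias
    return [max(0.0, hi) for hi in shifted]     # op 3: ReLU


def linear_bias_relu_fused(x, weight, bias):
    """One fused operation: a single pass and no intermediate lists."""
    return [max(0.0, xi * weight + bias) for xi in x]


inputs = [-2.0, -0.5, 0.0, 1.5, 3.0]  # made-up example values
unfused = linear_bias_relu_unfused(inputs, weight=2.0, bias=1.0)
fused = linear_bias_relu_fused(inputs, weight=2.0, bias=1.0)

assert unfused == fused  # fusion changes the execution plan, not the result
print(fused)
```

The fused version reads each input once and never writes the intermediate results back to memory, which is where much of the speedup comes from on real hardware.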

Faster inference makes greater scalability possible for generative AI applications. The GPU processing time needed per query falls as a model’s processing speed increases, which lowers the cost of running a query in the cloud. For every dollar spent on compute resources, generative AI applications that use models with faster inference can serve more queries.

Furthermore, generative AI applications have a smaller carbon footprint thanks to faster inference. Less GPU processing time per query means less energy (kWh) consumed, which lowers CO2 emissions.
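The relationship between inference speed, cost, and emissions can be written out as simple arithmetic. The sketch below is a back-of-the-envelope model: the GPU price, power draw, and grid carbon intensity are placeholder values chosen for illustration, not measurements, and the function name is hypothetical.

```python
def per_query_footprint(gpu_seconds, gpu_hourly_price_usd,
                        gpu_power_kw, grid_kg_co2_per_kwh):
    """Return (cost in USD, emissions in kg CO2) for one query."""
    gpu_hours = gpu_seconds / 3600
    cost_usd = gpu_hours * gpu_hourly_price_usd
    energy_kwh = gpu_hours * gpu_power_kw
    return cost_usd, energy_kwh * grid_kg_co2_per_kwh


# All numbers below are placeholders, not measurements.
ASSUMED = dict(gpu_hourly_price_usd=2.0, gpu_power_kw=0.4,
               grid_kg_co2_per_kwh=0.5)

baseline_cost, baseline_co2 = per_query_footprint(gpu_seconds=2.0, **ASSUMED)
optimized_cost, optimized_co2 = per_query_footprint(gpu_seconds=1.0, **ASSUMED)

print(f"Queries per dollar: {1 / baseline_cost:.0f} -> {1 / optimized_cost:.0f}")
print(f"CO2 per query (g):  {baseline_co2 * 1000:.3f} -> {optimized_co2 * 1000:.3f}")
```

In this simplified model, halving the GPU time per query doubles the number of queries served per dollar and halves the emissions per query.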

Generative AI: The risky business and the concerns with off-the-shelf generative AI models

Using pre-built, commercially available generative AI models in business raises certain important concerns. Each firm must strike a balance between the risks involved and the opportunity for value generation. Depending on the business and the use case, organizations with a low risk tolerance may find that building in-house or collaborating with a dependable partner produces better outcomes.

• Internet data is not always fair and accurate.

Today, enormous amounts of data from sources such as Wikipedia, websites, articles, and image or audio files are at the center of most generative AI. To create content, generative models match patterns in the underlying data, and without safeguards, bad actors can use them to push disinformation, bigotry, and online abuse. Because the technology is so new, there is sometimes a lack of accountability as well as increased exposure to reputational and legal risk in areas like copyrights and royalties. The most relevant example is deepfake technology: AI-generated videos, images, and audio that are difficult to tell apart from the real thing and that lead to abuse and false propaganda.

• There can be a split between model developers and all model use cases.

Developers of generative models may not realize the full scope of how a model will be used and adapted downstream for different purposes. This can lead to incorrect assumptions and outcomes. Such errors are not critical when they involve less important decisions, such as selecting a product or service. They are critical when they affect a business-critical decision that may expose the organization to accusations of unethical behavior, including bias, or to regulatory compliance issues that can lead to audits or fines.

• Litigation and regulation impact use.

Regulatory frameworks applicable to generative AI are only now emerging and are evolving rapidly. Concerns about litigation and regulation will limit how major firms adopt generative AI at first. This is especially true in highly regulated industries like financial services and healthcare, where the tolerance for unethical, biased decisions based on incomplete or erroneous data is very low and models can have negative consequences. The regulatory framework for generative models will eventually catch up, but organizations must be proactive in complying with it to prevent compliance violations, reputational harm, audits, and fines.

The Need for Generative AI Optimization and Scaling Solutions

AI-driven businesses can successfully lower the operational and environmental costs of scaling generative AI models by putting the aforementioned strategies into practice. However, building specialized generative models and applying optimization techniques requires a high level of technical competence. Even for seasoned machine learning experts with strong deep learning backgrounds, the process can be time-consuming. To reduce risks, accelerate development cycles, avoid missing market opportunities, improve customer engagement, and maintain competitive advantages, businesses need quick and effective solutions.

It is important to take the following factors into careful consideration while scaling generative AI for business:

• Use Cases

Businesses can employ generative AI for a range of use cases, including enhancing the customer experience, boosting operational effectiveness, providing new product features, and enhancing worker capabilities. It is crucial to pinpoint the precise use cases that are pertinent to the organization and compatible with its objectives.

• Data Quality

To generate accurate outputs, generative AI models need a lot of high-quality data. Preparing that data includes removing errors, organizing it in a way that AI can understand, and ensuring that it is diverse and accurate. It is crucial to ensure that the data used to train the models is unbiased and representative of the business.

• Ethical Considerations

Generative AI can raise ethical issues, such as the possibility that the models will create objectionable or harmful content. It can also create other business risks, such as threats to customer privacy, job displacement, data theft, and misinformation. It’s crucial to establish ethical standards and make sure that models are created and used appropriately.

• Environmental Impact

Scaling generative AI models can be expensive and can harm the environment. The most significant environmental impacts of AI are energy consumption and an increase in e-waste. To reduce the impact, it is crucial to investigate efficient scaling strategies and affordable solutions.

• Proficiency

Generative AI demands specific knowledge in fields like software engineering, data science, and machine learning. It’s crucial to have a team with the knowledge and expertise needed to design, create, and use generative AI models.

By properly taking these elements into account, businesses can safely scale generative AI and realize its potential to revolutionize how they operate and engage with customers. As businesses adopt generative AI in 2024, entire markets will be reinvented as business practices and ways of working change. According to research, AI could contribute up to $15.7 trillion to the world economy by 2030.

Generative AI models have emerged as a transformational force that can produce human-like language, graphics, and code with astonishing precision. With the right resources and experience, this capability can bring about a significant change that enables organizations to grow enormously.